For a long time, Turkish models climbed leaderboards written somewhere else—translated datasets, English‑first assumptions, noisy web scrapes. Useful, yes. Representative of real Turkish? Not quite. TrGLUE is our answer: a native, human‑verified GLUE‑style benchmark purpose‑built for Turkish. In this post, we’ll say a friendly hello, then go straight into the tags: what’s in the suite, how we built and verified it, how we cleaned it, and how a strong baseline (BERTurk) actually fares. If you evaluate Turkish models, this is designed to be something you can trust—and replicate.

Design principles

Let’s start with the philosophy. We mirrored what works from GLUE but made calls that respect Turkish as it is written and read.

Native by default. Wherever possible, inputs come from human‑written Turkish sources. Not machine‑translated, not “cross‑lingual if you squint,” but genuinely Turkish. The goal is that examples feel natural to Turkish speakers and surface morphology and pragmatics in a way translated datasets can’t.

Verified labels, not vibes. We do use LLMs for weak labels where that speeds up throughput, but the final say comes from humans. Professional annotators (through Co-one) verify, spot‑check, and adjudicate disagreements. The process emphasizes uncertainty visibility: if a label is borderline, it gets flagged, discussed, and either clarified or discarded. The result is fewer hidden landmines in your eval set.

Transparent, task‑appropriate metrics. Every task declares its metric(s) up front—Matthews for acceptability (because class imbalance matters), Accuracy/F1 for the binary classifiers, Pearson/Spearman for similarity, matched/mismatched accuracy for MNLI. No surprises, no metric fishing.

Clean data and repeatable hygiene. Deduplication, leakage checks, and morphology‑aware preprocessing aren’t “nice to have”—they’re the foundation. Turkish tokenization gets special handling (e.g., apostrophes in proper names plus suffixes). You should be able to rerun the pipeline and get the same hashes.

Task suite at a glance

TrGLUE groups tasks into the usual families, but with Turkish‑specific care in sourcing and labeling. Here’s how to think about them:

Single‑sentence tasks

- TrCoLA (acceptability): This is your syntax/pragmatics stress test. We report Matthews correlation, which is robust when “acceptable” vs “unacceptable” isn’t perfectly balanced. Expect morphology and negation to bite; that’s by design.

TrSST‑2 (sentiment): This is your classic positive/negative sentiment on native Turkish snippets, indeed movie reviews. Accuracy and F1 are both reported, so you can see both overall hit‑rate and class balance handling. Similarity and paraphrase

- TrMRPC (paraphrase): Sentence pairs with a yes/no paraphrase label. Accuracy and F1 tell you whether your model is just pattern‑matching or genuinely capturing paraphrase variability—especially with light‑verb constructions.

- TrSTS‑B (similarity): Continuous similarity judgments with Pearson and Spearman correlations. This one is small and carefully curated; we only release dev for now, so report on dev and expect higher variance until a test set is available.

TrQQP (paraphrase): Question pairs “in the wild,” a different domain and style from MRPC. High scores here don’t guarantee MRPC success, which is exactly why both are in the suite. Inference

- TrMNLI (NLI): The workhorse. We report matched and mismatched accuracy so you can see how your model generalizes across domains/registers.

- TrQNLI (QA/NLI): Question understanding reframed as NLI. It’s a good proxy for how well your model connects world knowledge with Turkish syntax.

- TrRTE (NLI): Recognizing textual entailment at a smaller scale, with fully human‑written hypotheses. Accuracy is the headline, but the examples are crafted to be diagnostic.

Below are some examples from the above datasets:

Soluklu [ph] ve Soluksuz [p] sesleri Türkçede serbest dağılım içindedir sözcük sonunda. / unacceptable CoLA

İnandırıcılıktan oldukça uzak yapay bir fotoğraf sanatçılığı üzerine kurulmuş senaryosuyla, üçlü lezbiyen ilişkiyi kötü oyunculukla anlatamayan oldukça sıradan bir film. 2/10 / negative SST-2

Meme kanseri ile ilgili merak edilen 5 soru / Kadınlarda sık görülen meme kanseri, erkeklerde de görülebiliyor / not equivalent MRPC

Adam ata biniyor. / Bir adam ata biniyor. / 5 STS-B

Burçlar ne kadar aşıkmış? / Kedim çok hasta, ne yapmalıyım? / not duplicate QQP

Egomanyak kadinin teki hic giresim gelmiyor. / Pizzalar harika görünüyor! / neutral / MNLI

Londra'da ve Barselona'da İstanbul'a kıyasla kaç müze var? / Kültür ve Turizm Bakanlığı, 2009'da İstanbul'da 69 müze olduğunu tahmin ediyor, bu sayı Londra'da 76 ve Barselona'da 51'dir. / entailment / QNLI

Yayımlanmış çok sayıda eseri ile sayısız ödüle layık görüldü. / Yazar birçok ödül almıştır. entailment / RTE

Construction notes and exceptions

We didn’t blindly mirror GLUE—there are places where Turkish needed a different touch.

TrRTE is entirely human‑written on the hypothesis side, following linguist‑designed guidelines. That matters when subtle entailments, negations, or evidentials (‑miş) make or break the label. Linguists also adjudicated disagreements, so borderline examples don’t slip through.

TrCoLA is hand‑crafted. No LLM text generation. The aim was to build items that probe the gray areas Turkish models stumble on: agreement mismatches, clitics, negation scope, and oddities in non‑finite verb chains.

TrSST‑2 labels come from ratings with human spot‑checks. That keeps the label grounded in real user sentiment while filtering obvious sarcasm/misattribution pitfalls.

TrSTS‑B uses a translate‑then‑edit approach. Humans corrected MT outputs to sound naturally Turkish and culturally appropriate, while preserving the intended similarity label. Because the set is small (by design—quality over quantity), we do not release a test split yet. Evaluations are conducted on the dev split. Plan for some variance; we’ll expand this to a full test set as it grows.

Labeling and verification workflow

Here’s the high‑level loop we run, and why it helps you trust the result.

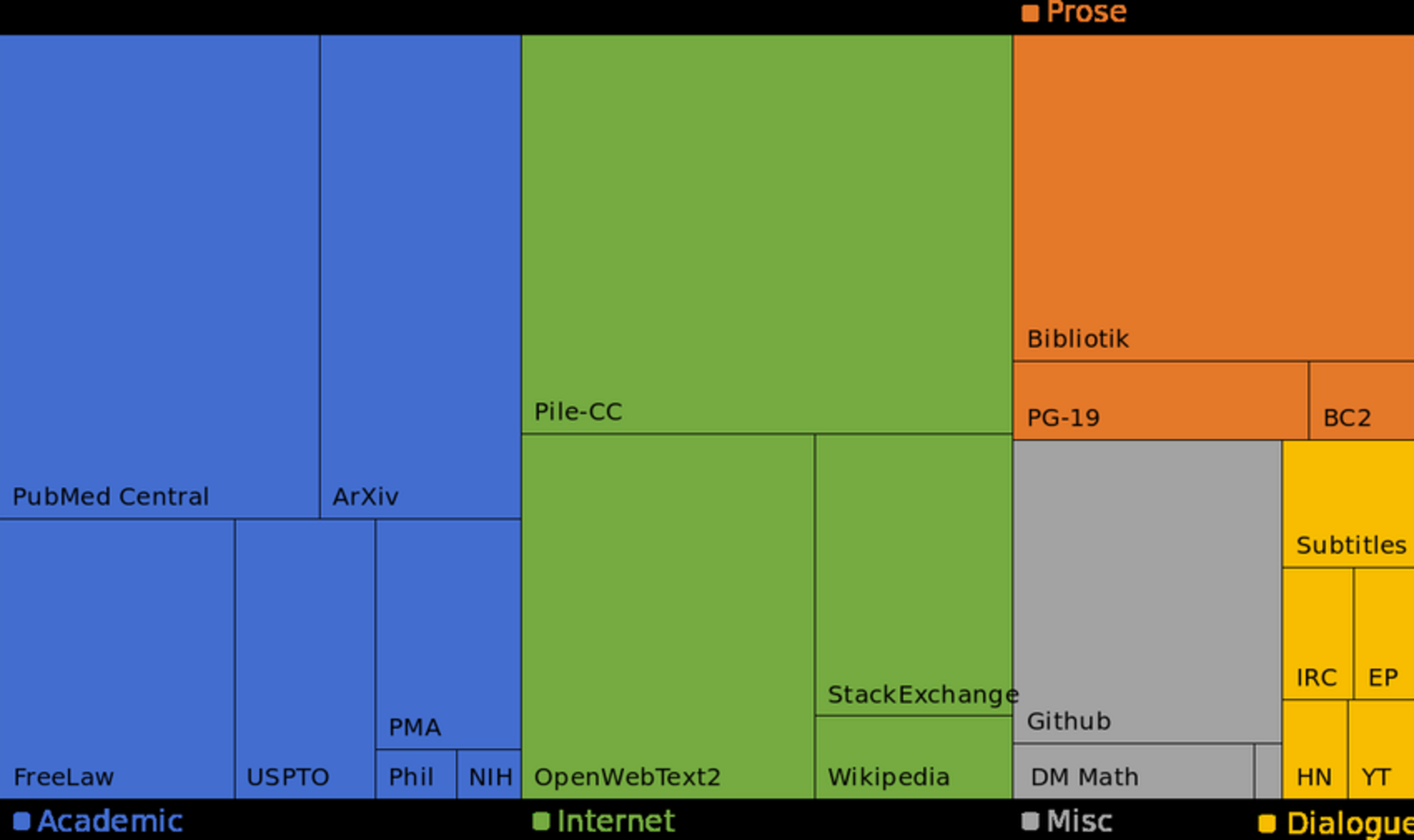

Source and weak labeling: We pull native Turkish sources or construct pairs according to task guidelines. Where it makes sense, our LLM provide weak labels to speed up triage—especially helpful for large pools where human time is precious. Throughout our work, LLM of our choice is Snowflake Arctic due to its permissive licencing.

Human verification via Co-one: Professional annotators review labels with explicit instructions, run consistency checks, and flag “uncertain” items. Disagreements get adjudicated, not hand‑waved. The mantra is: uncertainty visible beats uncertainty buried.

Finalization and splits: We deduplicate aggressively (near‑dupes included), run leakage checks across train/dev/test, and format splits with unambiguous metadata. For TrSTS‑B, we stop at dev to avoid over‑promising reliability on a tiny test.

We kept preprocessing practical, but mindful of Turkish specifics.

spaCy Turkish for lemmatization and morphosyntax: We lean on the Morphologizer for statistical morphological tagging. It’s not magic, but it’s a consistent baseline and helps with audits and sanity checks.

Turkish‑aware tokenization: Proper names with suffixes (İstanbul’a, Ankara’nın) keep apostrophes inside the token to avoid entity fragmentation. That small choice makes a big difference in downstream entity‑heavy tasks.

Deduplication and leakage audits: We remove exact and near duplicates, strip off‑language stragglers, and verify that nothing sneaks across splits. The aim is that evaluation is about your model, not our leakage.

Baseline results of BERTurk

A familiar, strong baseline helps ground expectations. Here’s how BERTurk performs out of the box on TrGLUE:

| Task | Metric(s) | Score |

|---|

| TrCoLA | Matthews corr. | 42.0 |

| TrSST-2 | Accuracy / F1 | 87.4 / 91.3 |

| TrMRPC | Accuracy / F1 | 74.4 / 72.7 |

| TrSTS-B | Pearson / Spearman (dev) | 71.3 / 69.9 |

| TrQQP | Accuracy / F1 | 95.4 / 94.3 |

| TrMNLI | Matched / Mismatched accuracy | 87.9 / 90.8 |

| TrQNLI | Accuracy | 90.6 |

| TrRTE | Accuracy | 92.2 |

Let’s read these numbers like practitioners, not just scoreboard watchers.

QQP vs MRPC split personality: The model looks very strong on TrQQP, which suggests it handles everyday question paraphrases well. But TrMRPC is more demanding—light‑verb alternations (etmek/yapmak), derivational morphology, and register shifts reduce F1. If your product leans on paraphrase detection, test both; success on one doesn’t guarantee the other.

MNLI mismatched > matched: That’s interesting. It often points to domain/register quirks: training fits one style too tightly, generalizes better to another, or the mismatched set aligns more with pretraining distribution. Worth an error dive.

STS‑B is promising but volatile. Correlations in the low 70s are solid for Turkish at this size. Because it’s dev‑only for now, try multiple seeds and report mean/std. Expect stability to improve as we expand and release a test set.

CoLA remains hard by design. A Matthews of 42 tells us the model still misses acceptability boundaries triggered by negation scope, evidential past (‑miş), and agreement. If you’re iterating on morphology‑aware tokenization or pretraining, CoLA is where you’ll see payoff.

Common error modes we track

If you want to squeeze more performance, these are the potholes to patch first.

Morphology gotchas: Negation (‑ma/‑me) and evidentiality (‑miş) flip meanings subtly; models over‑index on surface cues and miss scope. Agreement mismatches are another frequent source of false acceptability.

Light verbs and derivations: Turkish paraphrases often pivot on etmek/yapmak constructions and derivational morphology. Models that memorize phrasings struggle to generalize across these.

Case‑marked named entities: Tokens like “İstanbul’a” bundle an entity with a role. Segmenting it wrong can break both entity and syntax understanding. This shows up in paraphrase and NLI when roles shift.

Register shifts: Colloquial phrasing vs formal rewrites can change entailment strength without obvious lexical overlap. If your training data is style‑narrow, you’ll feel the pain here.

How to use TrGLUE

Getting started should be dull—in the best way, use our official evaluation script in TrGLUE Github repo.

Load a split, run the right metric, report results. Each task clearly states its metric(s). For TrSTS‑B, report on dev (no public test yet) and include your random seed strategy.

Start with BERTurk, swap your model. BERTurk gives a realistic anchor for Turkish. From there, you can test multilingual LMs, adapters, or domain‑tuned variants and see real differences.

Share results and artifacts. We keep a community leaderboard and welcome PRs with configs, seeds, and checkpoints. If you catch an annotation edge case, open an issue—we’d rather fix it than hide it.

If you’ve been evaluating Turkish models on translated or generic web data, TrGLUE is your upgrade path. It’s native, human‑verified, and built to reflect how Turkish is actually used. Try the suite, share your numbers, and let’s push Turkish NLP forward together.

For the full dataset construction details you can always visit the full research paper.

Acknowledgments

Huge thanks to our annotators and to Co‑one for professional verification and uncertainty‑aware evaluation support. It takes care—and people—to build a benchmark that respects Turkish. Second place goes to Snowflake, kudos to them for offering Arctic freely. Community feedback, error reports, and contributions are very welcome.